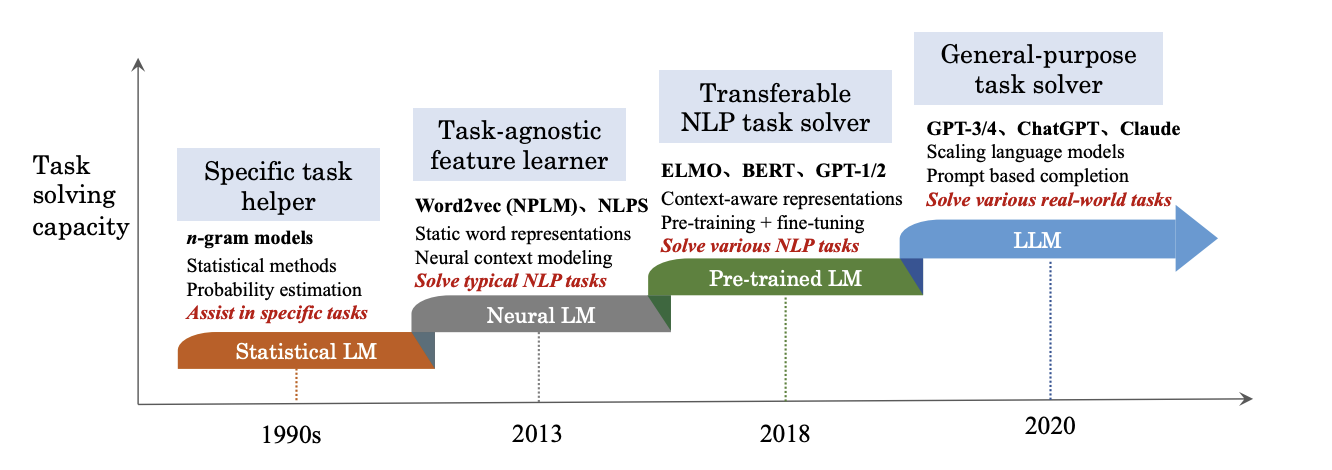

2. History of LLMs in the Broader AI Context

Timeline and Milestones in LLM Development

Early Foundations (1950s - 2000s)

- 1950s: AI foundations laid with early research on neural networks and computational linguistics.

- 1980s: Popularization of backpropagation (Rumelhart, Hinton, and Williams, 1986), a critical algorithm for training multi-layer neural networks. This paved the way for deeper, more complex models.

- 1990s - 2000s: Statistical language models and traditional NLP approaches (e.g., n-grams, Markov models) dominate the field. They were foundational but could only condition on a short, fixed window of preceding words.
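To make the limitation of n-gram models concrete, here is a minimal sketch of a bigram (2-gram) model. The toy corpus and the function names `train_bigram` and `next_word_probs` are illustrative assumptions, not part of any particular library. The model predicts the next word from the single preceding word only, which is why it cannot capture longer-range context.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next | prev); empty if prev is unseen."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()} if total else {}

# Toy corpus (assumption for illustration only).
corpus = "the cat sat on the mat the cat ate".split()
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

In this toy corpus, "the" is followed by "cat" twice and "mat" once, so the model assigns them probabilities of 2/3 and 1/3. Anything the model has never seen after a given word gets probability zero, which is one reason practical n-gram systems relied on smoothing.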

Neural Network Breakthroughs (2010 - 2015)

- Early 2010s: Neural networks gain traction for NLP tasks. Word2Vec (2013) learns dense word embeddings that capture semantic similarity between words, although each word receives a single vector regardless of the sentence it appears in.

- 2014: Introduction of seq2seq models (sequence-to-sequence), allowing machines to perform complex tasks like translation. These models, however, struggled with context in long sequences.

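- 2014 - 2015: Emergence of attention mechanisms in seq2seq translation models (Bahdanau et al.), which allowed models to “focus” on specific words in a sentence, improving context handling. Attention later became the core of the Transformer architecture, introduced in 2017.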
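The idea of "focusing" on specific words can be sketched in a few lines. The example below uses the scaled dot-product form of attention (the variant used in Transformers, not the additive form of the earliest attention papers), and the toy vectors and the function name `attend` are assumptions chosen for illustration. Each word gets a weight based on how well its key matches the query, and the output is the weighted average of the value vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Toy 2-dimensional vectors for a three-word sequence (illustrative only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, context = attend(query, keys, values)
print(weights, context)
```

Here the third word's key matches the query best, so it receives the largest weight. In a real model the queries, keys, and values are learned projections of the word representations rather than hand-picked numbers.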

The Rise of Transformers and Pre-trained Models (2018 - 2020)

- 2018: Introduction of BERT (Bidirectional Encoder Representations from Transformers) by Google, which enabled models to learn bidirectional context, enhancing performance across various NLP tasks.

- 2019: OpenAI releases GPT-2 (Generative Pre-trained Transformer 2), a 1.5-billion-parameter transformer-based model with strong language generation capabilities. Its fluent long-form output drew wide attention to Generative AI.

- 2020: OpenAI introduces GPT-3, featuring 175 billion parameters, marking a leap in scale and performance and showcasing LLMs' ability to generate text that is often difficult to distinguish from human writing.

Expansion and Specialization (2021 - Present)

- 2021: The unified text-to-text format popularized by T5 (Text-To-Text Transfer Transformer, first published by Google in 2019) further enhances versatility: every NLP task is expressed as text in, text out.

- 2022: Focus on model efficiency and responsible AI practices emerges, with emphasis on fine-tuning, domain-specific LLMs, and reducing energy costs.

- 2023: Major LLM providers like OpenAI, Google, and Meta focus on expanding multilingual capabilities and ensuring models are safer and more controllable.
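The unified text-to-text format mentioned in the 2021 entry above can be illustrated with a small sketch. The helper `to_text_to_text` and its templates are hypothetical. The translation and summarization prefixes follow the style of examples in the T5 paper, while the `sentiment:` prefix is invented here for illustration. No model is called; the point is only that different tasks reduce to the same string-in, string-out interface.

```python
# Hypothetical task templates; only the general prefix style follows T5.
TEMPLATES = {
    "translate": "translate {source} to {target}: {text}",
    "summarize": "summarize: {text}",
    "sentiment": "sentiment: {text}",  # invented prefix, for illustration
}

def to_text_to_text(task, **fields):
    """Render any supported task as a single input string for one model."""
    if task not in TEMPLATES:
        raise ValueError(f"unsupported task: {task}")
    return TEMPLATES[task].format(**fields)

prompt = to_text_to_text(
    "translate", source="English", target="German", text="That is good."
)
print(prompt)
```

Because every task shares this interface, the same model, training objective, and decoding procedure can be reused across tasks, with the expected answer (a translation, a summary, a label) also written out as plain text.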

Significance of LLMs in AI Development

The evolution of LLMs is significant because it represents a transition from task-specific AI to generalized, multi-purpose models that can interpret and generate language with near-human fluency. LLMs have catalyzed developments in various fields due to:

- Scalability and Accessibility: LLMs support many NLP tasks without needing to be retrained for each specific application.

- Improved Human-Machine Interaction: The ability to interpret natural language enables applications like virtual assistants and chatbots.

- Versatility in Application: These models can be fine-tuned for specific industries, making LLMs versatile tools across healthcare, education, customer service, and more.