Retrieval-Augmented Generation (RAG) on Documents

Overview of RAG on Documents

Definition:

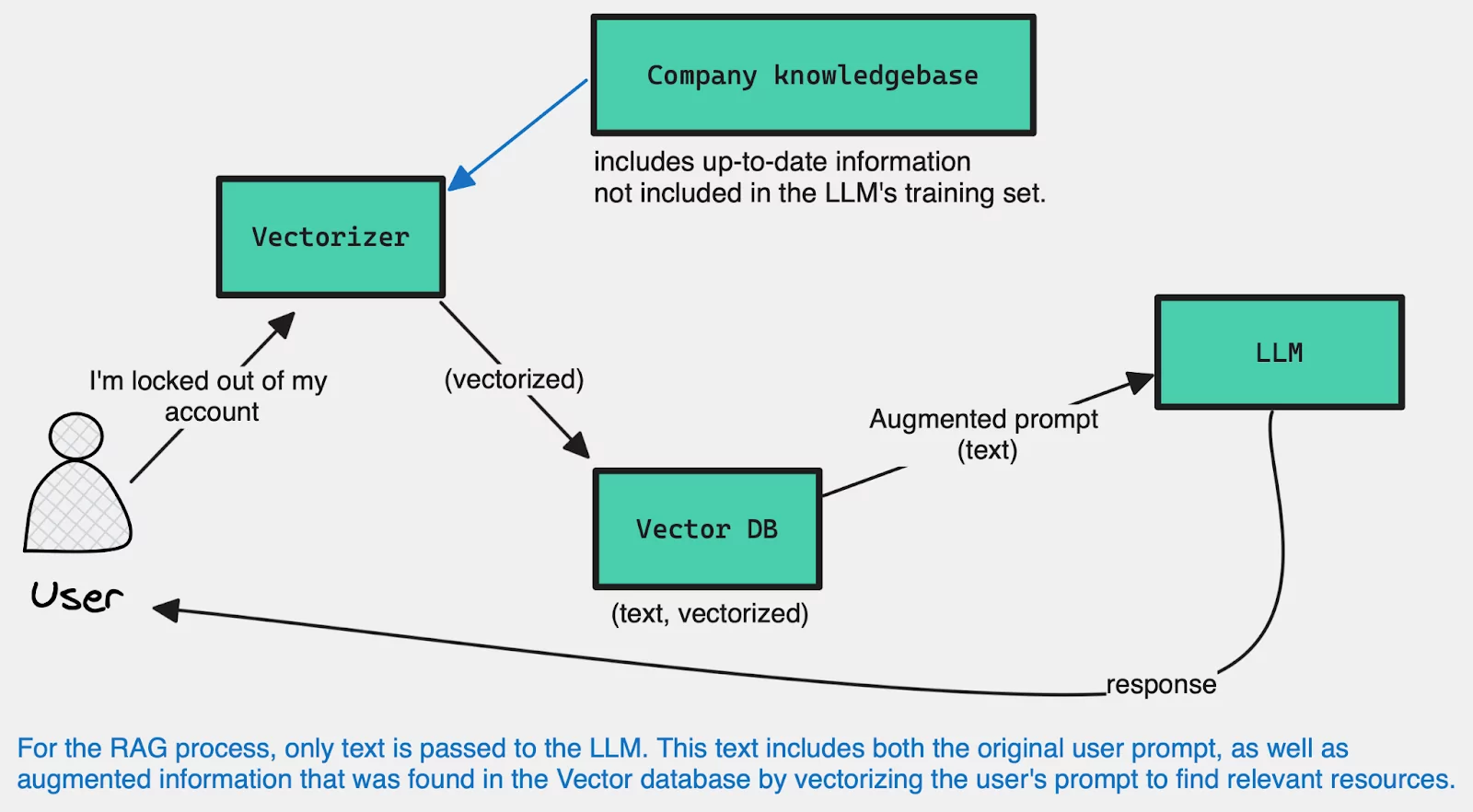

Retrieval-Augmented Generation (RAG) on documents is a technique that lets a language model draw on specific, relevant information from a predefined collection of documents. The documents are converted into embeddings (numeric vector representations). For each user query, the system retrieves the most relevant passages and passes them to the model, which then generates a more accurate, context-rich answer.

Why Use RAG on Documents?

- Contextual Responses: RAG ensures that responses are grounded in specific information from a reliable document source.

- Accuracy and Consistency: By linking responses to existing knowledge documents, RAG enhances the quality and relevance of answers.

- Up-to-Date Knowledge: A language model’s built-in knowledge is fixed at training time. RAG can pull in newer or highly specific information that the model does not contain.

How RAG Works with Document Embeddings

https://www.aporia.com/learn/understanding-the-role-of-embeddings-in-rag-llms/


Step 1: Knowledge Documents are Converted into Embeddings

What are Embeddings?

Embeddings are numerical representations of documents or text passages that capture their semantic meaning. In RAG, each knowledge document is converted into an embedding, creating a structured database of information that can be searched by similarity.

Process:

- Document Collection: Gather relevant documents that contain information useful for the assistant’s responses (e.g., FAQs, policy manuals, research articles).

- Embedding Creation: Long documents are usually first split into smaller text chunks. Each document or chunk is then transformed into an embedding vector using a pre-trained model such as BERT, a GPT-family model, or a specialized embedding model. The vector captures the text’s meaning as a point in a high-dimensional space.

- Embedding Database: The embeddings are stored in a vector database (e.g., Pinecone) or a vector index library (e.g., FAISS), where they can be searched and retrieved efficiently.

Example:

Consider a virtual assistant for a university. Relevant documents might include course catalogs, registration guides, or campus FAQs. Each document is converted into an embedding and stored in a vector database, ready for retrieval.
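The indexing process for the university example can be sketched in Python. This is a minimal sketch under stated assumptions. `embed` is a toy stand-in for a real embedding model: it builds a unit-length bag-of-words vector keyed by word, so it reflects shared words rather than meaning. The 50-word `chunk` size and the `build_index` helper are hypothetical. A plain Python list stands in for the vector database, and the document texts are invented sample data.

```python
import math
import re
from collections import Counter

# Toy stand-in for a real embedding model (assumption for illustration only):
# a unit-length bag-of-words vector stored as {word: weight}.
STOPWORDS = {"a", "an", "and", "are", "for", "from", "is", "of", "on", "the", "to", "what"}

def embed(text: str) -> dict[str, float]:
    """Lowercase, tokenize, drop stopwords, count words, and scale to unit length."""
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]
    counts = Counter(words)
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}

def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words (hypothetical size)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def build_index(documents: dict[str, str]) -> list[dict]:
    """Chunk and embed every document, keeping each vector next to its source text."""
    index = []
    for doc_id, text in documents.items():
        for n, piece in enumerate(chunk(text)):
            index.append({"doc_id": doc_id, "chunk": n, "text": piece, "vector": embed(piece)})
    return index

# Invented sample documents for the university assistant.
documents = {
    "course_catalog": "The Bachelor of Science program requires 120 credits.",
    "registration_guide": "Register for classes online before the start of each semester.",
    "campus_faq": "The library is open from 8 am to 10 pm on weekdays.",
}
index = build_index(documents)
```

In a real system, `embed` would call a pre-trained embedding model and the index would live in a vector database such as Pinecone or in a FAISS index.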

Step 2: A Vector is Generated from the User Query

Creating a Query Vector:

When a user inputs a query, it is also transformed into an embedding vector that represents the query’s meaning.

Process:

- User Query: The user asks a question, such as “What are the admission requirements for the Bachelor of Science program?”

- Query Embedding: The query is transformed into an embedding vector using the same model that was used to create document embeddings, ensuring compatibility.

- Representation in Vector Space: The query embedding represents the semantic content of the user’s question in a form that can be compared to document embeddings.

Example:

The question “What are the admission requirements for the Bachelor of Science program?” is converted into an embedding that captures the essence of “admission requirements” and “Bachelor of Science” within the vector space.
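The query side can be sketched by applying the same embedding function to the example question. In this sketch, `embed` is a hypothetical toy stand-in for a real embedding model (a unit-length bag-of-words vector). The key point it illustrates is that documents and queries must pass through the same function, so the resulting vectors live in the same space. Printing the vector’s keys shows which terms of the question the toy vector keeps.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a real embedding model. Documents must be embedded
# with this same function, otherwise query and document vectors are not comparable.
STOPWORDS = {"a", "an", "and", "are", "for", "from", "is", "of", "on", "the", "to", "what"}

def embed(text: str) -> dict[str, float]:
    """Return a unit-length bag-of-words vector as {word: weight}."""
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]
    counts = Counter(words)
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}

query = "What are the admission requirements for the Bachelor of Science program?"
query_vector = embed(query)
print(sorted(query_vector))  # ['admission', 'bachelor', 'program', 'requirements', 'science']
```

Each of the five remaining words gets weight 1/√5 ≈ 0.447. A real embedding model would instead produce a dense vector, in which related phrasings of the same question are placed close to this one.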

Step 3: Based on the Vector, the Most Relevant Embeddings are Retrieved

Retrieving Relevant Documents:

The query vector is compared to the document embeddings, and the most similar embeddings are retrieved. This similarity search allows the system to identify which documents best match the user’s question.
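Cosine similarity, a common choice for this comparison, can be written for a query vector q and a document vector d with n components as follows. The notation here is introduced for illustration. The worked example uses small made-up vectors. The last line shows that for unit-length vectors, ranking by highest cosine similarity gives the same order as ranking by smallest Euclidean distance.

```latex
\cos(\mathbf{q}, \mathbf{d})
  = \frac{\mathbf{q} \cdot \mathbf{d}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d} \rVert}
  = \frac{\sum_{i=1}^{n} q_i d_i}{\sqrt{\sum_{i=1}^{n} q_i^2} \, \sqrt{\sum_{i=1}^{n} d_i^2}}

\text{Example: } \mathbf{q} = (1, 0, 1),\ \mathbf{d} = (1, 1, 0)
  \;\Rightarrow\; \cos(\mathbf{q}, \mathbf{d}) = \frac{1}{\sqrt{2}\,\sqrt{2}} = 0.5

\text{If } \lVert \mathbf{q} \rVert = \lVert \mathbf{d} \rVert = 1:\quad
  \lVert \mathbf{q} - \mathbf{d} \rVert^2 = 2 - 2\cos(\mathbf{q}, \mathbf{d})
```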

Process:

- Similarity Search: The vector database compares the query embedding to document embeddings and calculates similarity scores (often using cosine similarity).

- Top Matches: The system keeps the highest-scoring matches (typically a small, fixed number, often called the top k) and returns the documents or text passages they represent.

- Context for Response Generation: These retrieved documents are provided to the language model as additional context, allowing it to generate a response grounded in the retrieved information.
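The similarity search, top-k selection, and context assembly can be sketched in a few lines. These are assumptions for illustration only: `embed` is a toy bag-of-words stand-in for a real embedding model, `retrieve` is a hypothetical helper with k = 2, the chunk texts are invented, and a sorted Python list replaces the vector database. Because the toy vectors are unit length, their dot product equals their cosine similarity.

```python
import math
import re
from collections import Counter

STOPWORDS = {"a", "an", "and", "are", "for", "from", "is", "of", "on", "the", "to", "what"}

def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: unit-length bag-of-words vector."""
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]
    counts = Counter(words)
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}

def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity; both vectors are unit length, so this is their dot product."""
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())

def retrieve(query: str, index: list[tuple[str, dict[str, float]]], k: int = 2) -> list[str]:
    """Score every chunk against the query vector and return the k best-matching texts."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

# Invented sample chunks, embedded ahead of time.
chunks = [
    "Admission requirements for undergraduate programs include a high school diploma and transcripts.",
    "The library is open from 8 am to 10 pm on weekdays.",
    "Register for classes online before the start of each semester.",
    "The Bachelor of Science program requires 120 credits.",
]
index = [(text, embed(text)) for text in chunks]

top = retrieve("What are the admission requirements for the Bachelor of Science program?", index)
context = "\n\n".join(top)  # this text is handed to the language model as extra context
```

Here the Bachelor of Science chunk scores about 0.55 and the admissions chunk about 0.30, while the other two score 0. The toy vector treats "program" and "programs" as unrelated words, a limitation that learned embedding models are designed to avoid. A vector database replaces the full `sorted` scan with an (often approximate) nearest-neighbour index.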

Example:

For the query about “admission requirements,” the similarity search might retrieve a document section outlining admission criteria for undergraduate programs. This retrieved text provides context, ensuring that the assistant’s response is accurate and based on relevant information.

RAG Workflow Summary

- Document Embeddings Creation: Knowledge documents are converted into embeddings and stored in a vector database.

- User Query Embedding: A user’s question is transformed into an embedding that captures its meaning.

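- Similarity Retrieval: The query embedding is matched against document embeddings to retrieve the most relevant documents.

- Response Generation: The retrieved text is passed to the language model as context, and the model generates an answer grounded in it.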

Benefits of Using RAG on Documents

- Context-Enhanced Responses: Answers are based on specific, relevant documents rather than generalized model knowledge.

- Accuracy and Specificity: The assistant can give precise answers drawn directly from the document sources, which reduces (though does not eliminate) unsupported or incorrect responses.

- Flexible and Updatable Knowledge Base: New documents can be embedded and added to the vector database at any time, keeping responses current without retraining the language model.
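Updating the knowledge base only means embedding a new document and adding it to the index, while the language model itself stays unchanged. The sketch below illustrates this under assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, `add_document` and `best_match` are hypothetical helpers, an in-memory list replaces the vector database, and the policy texts are invented.

```python
import math
import re
from collections import Counter

STOPWORDS = {"a", "an", "and", "are", "for", "from", "is", "of", "on", "the", "to", "what"}

def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: unit-length bag-of-words vector."""
    words = [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]
    counts = Counter(words)
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {w: c / norm for w, c in counts.items()} if norm else {}

index: list[tuple[str, dict[str, float]]] = []  # in-memory stand-in for a vector database

def add_document(text: str) -> None:
    """Embed a new document and append it to the index; no model retraining is involved."""
    index.append((text, embed(text)))

def best_match(query: str) -> str:
    """Return the stored text whose vector has the highest dot product (cosine) with the query."""
    query_vector = embed(query)

    def score(item: tuple[str, dict[str, float]]) -> float:
        return sum(w * item[1].get(t, 0.0) for t, w in query_vector.items())

    return max(index, key=score)[0]

add_document("Employees receive 20 days of paid vacation per year.")
before = best_match("Is remote work allowed?")  # only the vacation policy exists so far
add_document("Remote work is allowed up to three days per week.")
after = best_match("Is remote work allowed?")   # the newly added policy is found immediately
```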

Practical Application: Virtual Assistant with Document Retrieval

Scenario:

A virtual assistant for a company’s HR department that can answer questions about employee policies and benefits.

System Prompt:

“You are an HR assistant. Use company policy documents to answer questions accurately.”

User Query:

“What is the parental leave policy?”

RAG Response Workflow:

- The assistant converts the query into an embedding.

- The query embedding is matched to policy document embeddings in the vector database.

- The most relevant policy section on parental leave is retrieved and used to generate a precise, policy-compliant response.
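The last step, combining the system prompt, the retrieved policy text, and the user’s question into one request, can be sketched as plain string assembly. Several parts are assumptions: the retrieved chunk text is hypothetical, `build_messages` is a hypothetical helper, and the list of role/content dictionaries mirrors a common chat-message convention rather than any specific provider’s API. The instruction to answer only from the context is one possible design choice.

```python
SYSTEM_PROMPT = "You are an HR assistant. Use company policy documents to answer questions accurately."

def build_messages(query: str, retrieved: list[str]) -> list[dict[str, str]]:
    """Combine the system prompt, numbered retrieved passages, and the user question
    into chat-style messages (a common role/content convention, assumed here)."""
    context = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(retrieved, start=1))
    user_content = (
        "Answer using only the policy context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]

# Hypothetical chunk returned by the similarity search for this query.
retrieved = ["Section 4: Employees are entitled to 12 weeks of parental leave."]
messages = build_messages("What is the parental leave policy?", retrieved)
```

The resulting `messages` list would then be sent to a chat-style language model. The exact request format depends on the provider, so no specific API call is shown.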

Response Example:

“According to company policy, employees are entitled to 12 weeks of parental leave. For specific eligibility criteria, please refer to section 4 of the employee handbook.”

Key Takeaways

- Embeddings-Based Retrieval: RAG enables assistants to retrieve and use specific information from documents, enhancing response relevance.

- Versatile Knowledge Base: Document embeddings can be updated regularly, keeping the assistant’s responses up-to-date and domain-specific.

- Accuracy and Trust: Responses grounded in actual documents improve the accuracy and trustworthiness of virtual assistants, especially in sensitive fields like HR, healthcare, or customer support.

Additional Resources

- Vector Databases: Learn about vector databases such as Pinecone or Weaviate, and vector search libraries such as FAISS, which make retrieval efficient for RAG.

- Embedding Models: Explore different embedding models (e.g., BERT, Sentence Transformers) for converting text into high-quality embeddings.

- Cosine Similarity and Vector Search: Understand similarity metrics and how they impact retrieval accuracy in RAG.